We will define two services:

- an Apache server

- a PostgreSQL server

LVM will be used for all storage.

Because we really do not want data corruption, we will use STONITH to shoot a node that seems dead.

The iLO card will be used for STONITH.

    wget http://heanet.dl.sf.net/sourceforge/open-ha-cluster/openha-0.3.2-0.i386.rhel3.0.rpm
    rpm -i openha-0.3.2-0.i386.rhel3.0.rpm

or

    rpm -i http://heanet.dl.sf.net/sourceforge/open-ha-cluster/openha-0.3.2-0.i386.rhel3.0.rpm

In your .bashrc or .profile or ..., initialize the EZ variable:

    export EZ=/usr/local/cluster
    [ -f $EZ/env.sh ] && . $EZ/env.sh

    kilocat3 net eth0 238.3.3.3 2383 10
    kilocat3 net eth1 238.3.3.5 2385 10
    kilocat3 disk /dev/raw/raw1 0 10
    kilocat4 net eth0 238.3.3.4 2384 10
    kilocat4 net eth1 238.3.3.6 2386 10
    kilocat4 disk /dev/raw/raw1 2 10

On kilocat3, the heartbeat will be sent through eth0 (multicast address 238.3.3.3), eth1 (multicast address 238.3.3.5) and the raw device /dev/raw/raw1.
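The layout of the heartbeat lines above can be explored with a small sketch that prints the heartbeat paths of one node. It assumes, which the text does not spell out, that the fields are node, type and device, followed by address, port and timeout for `net` lines and by offset and timeout for `disk` lines. The function name `heartbeat_paths` and the `SAMPLE` variable are illustrative and not part of OpenHA.

```shell
#!/bin/sh
# Hypothetical helper: list the heartbeat paths declared for one node.
# Assumed field layout (not confirmed by the text):
#   net  lines: node net  <interface> <multicast address> <port> <timeout>
#   disk lines: node disk <device>    <offset>            <timeout>
heartbeat_paths() {
    # $1 = node name; the configuration lines are read from standard input
    awk -v node="$1" '
        $1 == node && $2 == "net"  { printf "net %s %s:%s\n", $3, $4, $5 }
        $1 == node && $2 == "disk" { printf "disk %s offset %s\n", $3, $4 }
    '
}

# Sample input copied from the heartbeat lines of this document
SAMPLE='kilocat3 net eth0 238.3.3.3 2383 10
kilocat3 net eth1 238.3.3.5 2385 10
kilocat3 disk /dev/raw/raw1 0 10
kilocat4 net eth0 238.3.3.4 2384 10
kilocat4 net eth1 238.3.3.6 2386 10
kilocat4 disk /dev/raw/raw1 2 10'

# Print the three heartbeat paths of kilocat3
printf '%s\n' "$SAMPLE" | heartbeat_paths kilocat3
```

Passing `kilocat4` instead lists the paths of the second node, which makes it easy to compare the two halves of the configuration.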

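    $EZ_BIN/service -a TST /bin/true kilocat3 kilocat4 /bin/true

The service characteristics: the service is named TST, kilocat3 is the primary node, kilocat4 is the secondary node, and /bin/true is used both as the start/stop script and as the check script.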

    $EZ/ezha start

Then check the service status:

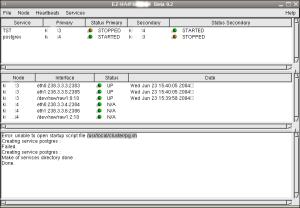

    $EZ_BIN/service -s
    1 service(s) defined:
    Service: TST
    Primary : kilocat3, STARTED
    Secondary: kilocat4, STOPPED

or, more easily, use the GUI:

    $EZ_BIN/gui

Now we can play with this first service, for example:

    $EZ_BIN/service -A TST freeze-stop

- then:

    $EZ_BIN/service -s

- on kilocat3:

    $EZ_BIN/service -A TST unfreeze

- then stop the openha process on kilocat4, to see what happens.
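While experimenting with freeze and unfreeze, it can help to pull a single node's state out of the status output. The sketch below does this with `awk`. It assumes the output of `$EZ_BIN/service -s` is laid out one item per line, as in the sample embedded in the code; the real layout may differ. The function name `node_state` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: extract the state of one node for one service from
# "service -s"-style text read on standard input.
# Assumption: each service starts with a "Service: NAME" line, followed by
# "Primary : NODE, STATE" and "Secondary: NODE, STATE" lines.
node_state() {
    # $1 = service name, $2 = node name
    awk -v svc="$1" -v node="$2" '
        $1 == "Service:" { current = $2; next }
        current == svc && index($0, node ",") {
            print $NF      # the state is the last word on the line
            exit
        }
    '
}

# Sample text based on the status output shown earlier (line breaks assumed)
SAMPLE='1 service(s) defined:
Service: TST
Primary : kilocat3, STARTED
Secondary: kilocat4, STOPPED'

printf '%s\n' "$SAMPLE" | node_state TST kilocat3
printf '%s\n' "$SAMPLE" | node_state TST kilocat4
```

On a live cluster the sample would be replaced by the real command, for example `$EZ_BIN/service -s | node_state TST kilocat3`.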

The checkup script for the PGSQL service (check_pg.sh):

    #!/bin/sh
    # Checkup script for the PGSQL service:
    # if the state of the service is UNKNOWN on the other node, shoot it.
    OTHERNODE=kilocat4
    STATUS=`$EZ_BIN/service -s | egrep "$OTHERNODE.*UNKNOWN" | wc -l`
    /bin/ping -c 1 $OTHERNODE > /dev/null 2>&1
    PINGING=$?
    if [ $STATUS -eq 1 -a $PINGING -eq 0 ] ; then
        # The service on the node seems to be down, but the node still pings:
        # for safety, shoot it
        $EZ/shoot.sh $OTHERNODE
        if [ $? -ne 0 ] ; then
            # Problem with STONITH, don't move
            exit 1
        fi
    else
        # The service on the node has a "valid" state.
        # Nothing to do
        exit 0
    fi
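The decision taken by the checkup script can be isolated in a function with no side effects, which makes it easy to see when a node would be shot. This is only a sketch: the function name `should_shoot` is hypothetical, and its two arguments stand in for the `STATUS` and `PINGING` values computed by the script.

```shell
#!/bin/sh
# Hypothetical, side-effect-free version of the decision taken by the
# checkup script: succeeds (shoot) only when the service state on the
# other node is UNKNOWN while the node itself still answers ping.
should_shoot() {
    # $1 = 1 if the service state is UNKNOWN, 0 otherwise
    # $2 = exit status of ping (0 means the node answers)
    [ "$1" -eq 1 ] && [ "$2" -eq 0 ]
}

# Print the decision for the four possible combinations
for unknown in 0 1; do
    for ping_rc in 0 1; do
        if should_shoot "$unknown" "$ping_rc"; then
            echo "unknown=$unknown ping_rc=$ping_rc -> shoot"
        else
            echo "unknown=$unknown ping_rc=$ping_rc -> leave alone"
        fi
    done
done
```

Only the combination of an UNKNOWN service state and a node that still answers ping leads to a shoot. A node that no longer pings is left alone by this check.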

The STONITH script (shoot.sh):

    #!/bin/sh
    # STONITH script
    echo "Bang Bang Bang: $1 is dead. RIP."
    exit 0

The pg.sh script:

    #!/bin/sh
    # Start/stop script for the PGSQL service:

    INTERFACE=eth0:4
    ADDR=184.65.17.91
    NETMASK=255.255.248.0
    BROADCAST=184.65.17.255
    VG=pgvg
    VOL=lvol1
    FS=/pgsql
    STARTUP_SCRIPT=/etc/init.d/rhdb

    usage ()
    {
        echo "Usage: $0 {start|stop|restart}"
        exit 1
    }

    start ()
    {
        # Network part
        /bin/ping -c 1 $ADDR > /dev/null 2>&1
        PINGING=$?
        if [ $PINGING -eq 0 ] ; then
            # The virtual IP address already answers ping: not normal
            echo "Problem: address $ADDR seems to be up !!!"
            exit 1
        else
            /sbin/ifconfig $INTERFACE $ADDR netmask $NETMASK broadcast $BROADCAST up
            STATUS=$?
            if [ $STATUS -ne 0 ] ; then
                echo "Problem plumbing interface $INTERFACE"
                exit $STATUS
            else
                echo "Interface $INTERFACE successfully plumbed"
            fi
        fi

        # File system part
        /sbin/vgchange -ay $VG
        /bin/mount /dev/$VG/$VOL $FS
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem mounting $FS"
            /sbin/vgchange -an $VG
            /sbin/ifconfig $INTERFACE down
            exit 3
        fi

        # Application start
        $STARTUP_SCRIPT start
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem starting service PostgreSQL"
            # Unmount first: a volume group with a mounted volume cannot be deactivated
            /bin/umount $FS
            /sbin/vgchange -an $VG
            /sbin/ifconfig $INTERFACE down
            exit 3
        else
            exit 0
        fi
    }

    stop ()
    {
        # Application stop
        $STARTUP_SCRIPT stop
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem stopping service PostgreSQL"
            exit $STATUS
        fi

        # File system part
        /bin/umount $FS
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem unmounting $FS"
            exit $STATUS
        fi
        /sbin/vgchange -an $VG
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem deactivating LVM group $VG"
            exit $STATUS
        fi

        # Network part
        /sbin/ifconfig $INTERFACE down
        STATUS=$?
        if [ $STATUS -ne 0 ] ; then
            echo "Problem deactivating network interface $INTERFACE"
            exit $STATUS
        fi
        echo "Service successfully stopped"
        exit 0
    }

    restart ()
    {
        # stop ends with "exit", so run it in a subshell to be able to continue
        ( stop ) || exit $?
        start
    }

    case "$1" in
        start) start ;;
        stop) stop ;;
        restart) restart ;;
        *) usage ;;
    esac
    exit 0
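The start function of pg.sh follows a common pattern: bring the resources up in order and, when one step fails, release the ones already acquired. The sketch below shows that pattern in isolation with stubbed steps, so it can be run anywhere. The step names, the `FAIL_AT` variable and the functions `run_step`, `undo_step` and `start_all` are hypothetical stand-ins for the real ifconfig, vgchange, mount and init script calls.

```shell
#!/bin/sh
# Sketch of an ordered start-up with rollback: run the steps in order and,
# when one fails, undo the steps that already succeeded, in reverse order.
# All names here are hypothetical; nothing touches the real system.
LOG=""

run_step() {
    # $1 = step name; the step "fails" when it matches $FAIL_AT
    [ "$1" != "$FAIL_AT" ] || return 1
    LOG="$LOG up:$1"
}

undo_step() {
    # $1 = step name; only records that the step was undone
    LOG="$LOG down:$1"
}

start_all() {
    done_steps=""
    for step in network filesystem database; do
        if run_step "$step"; then
            done_steps="$step $done_steps"   # most recent step first
        else
            for s in $done_steps; do undo_step "$s"; done
            return 1
        fi
    done
    return 0
}

# Simulate a failure while starting the database
FAIL_AT=database
if start_all; then
    echo "started:$LOG"
else
    echo "rolled back:$LOG"
fi
```

Keeping the list of completed steps in reverse order means the rollback loop needs no extra bookkeeping: the file system is released before the network address, mirroring the order used by the stop function.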

We can now create the service:

    $EZ_BIN/service -a postgres $EZ/pg.sh kilocat4 kilocat3 $EZ/check_pg.sh
    Creating service postgres :
    Make of services directory done
    Done.

and check with the GUI: